The landscape of Artificial Intelligence has shifted. If 2023 was the year of the “Chatbot” and 2024 was the year of “RAG” (Retrieval-Augmented Generation), then 2026 is officially the year of the large language model agentic workflow.

We are moving past the era where users provide a prompt and hope for a static text response. Today, businesses and developers are building autonomous systems where the AI handles complex, multi-step projects with minimal human oversight. This shift from “passive AI” to “active agents” is what defines the modern large language model agentic workflow.

🚀 Executive Summary: The Agentic Revolution

By 2026, the AI landscape has shifted from passive chatbots to the large language model agentic workflow. This evolution marks the rise of autonomous AI agents capable of independent planning, Chain-of-thought (CoT) reasoning, and real-time multi-agent coordination. No longer confined to text generation, these systems leverage Agentic RAG and specialized tools to execute complex, multi-step business processes. As enterprise-wide AI adoption matures, the focus moves from simple automation to “Cognitive Workflow Intelligence.” This guide explores how to architect these resilient, self-correcting systems while maintaining a vital human-in-the-loop for strategic oversight. 🌐

1. What is a Large Language Model? (The 2026 Perspective)

To understand the workflow, we must first define the engine. A Large Language Model (LLM) is a sophisticated neural network trained on trillions of tokens. By 2026, these models have evolved beyond simple text prediction into “reasoning engines.”

The core architecture, based on the Transformer model, allows the AI to understand context through “attention mechanisms.” However, a standalone LLM is like a genius in a dark room—brilliant, but with no way to interact with the outside world. To make it useful, we wrap it in a large language model agentic workflow.
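Here is what "attention" means in practice, as a minimal, dependency-free sketch of scaled dot-product attention, the core operation inside a Transformer layer. Each query is scored against every key, the scores are softmax-normalized, and the output is a weighted average of the value vectors. Real models add learned projections and many attention heads, and they run on GPU tensor libraries. The tiny vectors below are made up purely for illustration.

```python
import math

def softmax(scores):
    # Subtract the max before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs

# Toy example: one query attending over three tokens with 2-dimensional keys/values.
q = [[1.0, 0.0]]
k = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
v = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
print(attention(q, k, v))
```

Tokens whose keys align with the query get more weight. In this toy example, the first and third keys pull the output toward their values.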

2. Defining the Large Language Model Agentic Workflow

A large language model agentic workflow is a design pattern where the AI is given a goal, rather than a specific set of instructions. The model then uses an iterative loop of reasoning, tool use, and self-correction to achieve that goal.

Unlike traditional software that follows a linear if-then logic, an agentic workflow is dynamic. It can decide which tool to use, realize it made a mistake, and try a different path without the user ever hitting “enter” twice.
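The reason, act, and observe loop can be sketched in a few lines. This is a minimal illustration, not any framework's API. `fake_llm` is a hypothetical stand-in for a real model call that returns either a tool request or a final answer, and the single `calculator` tool is likewise made up. The parts that matter are the loop itself, the observation fed back into the history, and the step limit.

```python
from typing import Callable

# Hypothetical tool: handles "a+b" additions only (kept trivial on purpose).
def calculator(expression: str) -> str:
    left, right = expression.split("+")
    return str(int(left) + int(right))

TOOLS: dict[str, Callable[[str], str]] = {"calculator": calculator}

def fake_llm(history: list[str]) -> dict:
    """Hypothetical stand-in for a model call: asks for a tool once, then answers."""
    observations = [h for h in history if h.startswith("OBSERVATION:")]
    if not observations:
        return {"action": "calculator", "input": "17+25"}
    return {"final": f"The total is {observations[-1].split(': ', 1)[1]}."}

def run_agent(goal: str, llm=fake_llm, max_steps: int = 5) -> str:
    history = [f"GOAL: {goal}"]
    for _ in range(max_steps):
        decision = llm(history)            # 1. reason about the next step
        if "final" in decision:            # 2. stop once the model says it is done
            return decision["final"]
        tool = TOOLS[decision["action"]]   # 3. act with the chosen tool
        result = tool(decision["input"])
        history.append(f"OBSERVATION: {result}")  # 4. feed the result back in
    return "Stopped: step limit reached."

print(run_agent("Add 17 and 25."))
```

Nothing in `run_agent` is specific to arithmetic. Swapping `fake_llm` for a real model call and adding entries to `TOOLS` is what turns the same loop into a general agent.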

The Agentic vs. Non-Agentic Divide

| Feature | Standard LLM Prompting | Large Language Model Agentic Workflow |
| --- | --- | --- |
| Interaction | Single turn (Prompt -> Response) | Multi-turn, iterative loops |
| Autonomy | Human must verify and fix errors | AI self-reflects and self-corrects |
| Capability | Limited to text/knowledge | Can use browsers, APIs, and terminals |
| Example | “Write a summary of this link.” | “Find 10 leads, email them, and update my CRM.” |

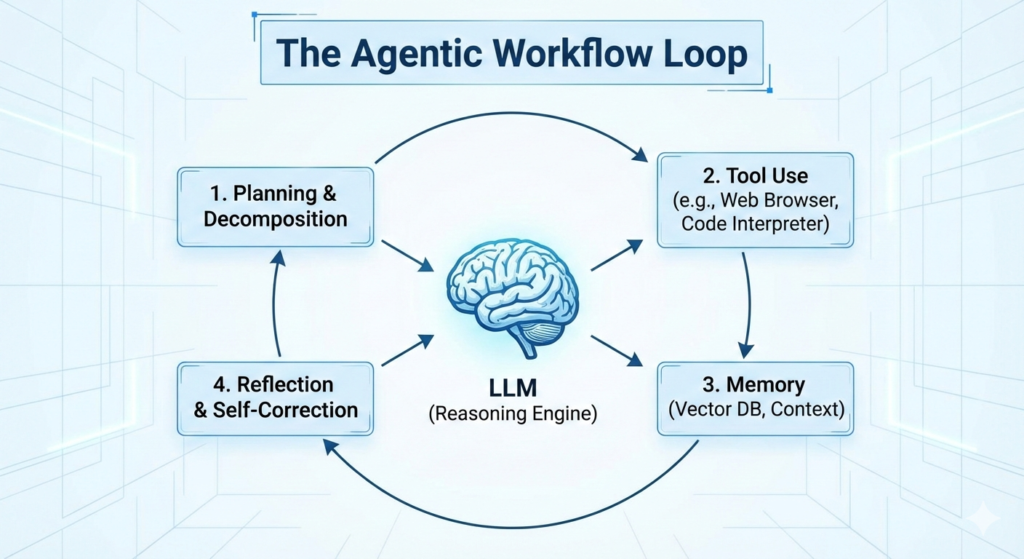

3. The Four Pillars of an Agentic Workflow 🏗️

To build a successful large language model agentic workflow, four architectural pillars must be present. These pillars transform a static model into an autonomous agent.

I. Planning and Decomposition

When a user provides a complex request, the agent doesn’t jump to the finish line. It breaks the task into sub-goals.

Chain of Thought (CoT): The model explains its reasoning step-by-step.

Sub-goal Decomposition: Breaking a “Market Research” task into: 1. Search, 2. Filter, 3. Summarize, 4. Format.
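Sub-goal decomposition can be sketched as a plan, meaning an ordered list of steps, executed against a shared context. In a real agent the model would generate the plan. Here it is hard-coded for the "Market Research" example, and each step function is a hypothetical placeholder that transforms made-up data.

```python
# Hypothetical step functions; in practice each would wrap a tool or model call.
def search(ctx):
    ctx["results"] = ["finding A", "sponsored", "finding B", "sponsored"]  # placeholder data
    return ctx

def filter_results(ctx):
    ctx["relevant"] = [r for r in ctx["results"] if r != "sponsored"]
    return ctx

def summarize(ctx):
    ctx["summary"] = "; ".join(ctx["relevant"])
    return ctx

def format_report(ctx):
    ctx["report"] = f"Market Research ({ctx['topic']}): {ctx['summary']}"
    return ctx

# The decomposed plan: 1. Search, 2. Filter, 3. Summarize, 4. Format.
PLAN = [("Search", search), ("Filter", filter_results),
        ("Summarize", summarize), ("Format", format_report)]

def execute_plan(topic):
    ctx = {"topic": topic, "log": []}
    for name, step in PLAN:
        ctx = step(ctx)
        ctx["log"].append(name)  # record progress so the agent knows which sub-goal is done
    return ctx

result = execute_plan("electric vehicles")
print(result["report"])
```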

II. Tool Use (The “Hands” of the AI)

This is where the large language model agentic workflow becomes powerful. The LLM is given a “tool belt” consisting of:

Web Browsers: To retrieve up-to-date information that is not in the model’s training data.

Code Interpreters: To run Python scripts for data visualization.

API Connectors: To talk to Slack, Salesforce, or GitHub.
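One common way to build the "tool belt" is a registry that maps tool names to functions, each with a short description the model can read. The sketch below assumes the model replies with a JSON object that names a tool and its arguments. The three tools are hypothetical stubs standing in for a browser, a code interpreter, and an API connector. No real Slack, Salesforce, or GitHub client is used.

```python
import json

# Hypothetical tool registry: name -> function plus a description shown to the model.
TOOLS = {}

def tool(description):
    """Decorator that registers a function as a tool the agent may call."""
    def register(fn):
        TOOLS[fn.__name__] = {"fn": fn, "description": description}
        return fn
    return register

@tool("Fetch a web page and return its text (stub for a browser).")
def browse(url):
    return f"<contents of {url}>"

@tool("Add a list of numbers (stub for a code interpreter).")
def sum_numbers(numbers):
    return sum(numbers)

@tool("Post a message to a chat channel (stub for an API connector).")
def post_message(channel, text):
    return f"posted to #{channel}: {text}"

def tool_manifest():
    # Text that would go into the prompt so the model knows what it can call.
    return "\n".join(f"- {name}: {meta['description']}" for name, meta in TOOLS.items())

def dispatch(model_reply):
    """Run a tool call the model emitted as JSON, e.g. {"tool": ..., "args": {...}}."""
    call = json.loads(model_reply)
    if call["tool"] not in TOOLS:
        # Return the error as an observation so the model can choose another tool.
        return f"error: unknown tool {call['tool']!r}"
    return TOOLS[call["tool"]]["fn"](**call["args"])

print(tool_manifest())
print(dispatch('{"tool": "post_message", "args": {"channel": "sales", "text": "Report ready"}}'))
```

When the model asks for an unknown tool, `dispatch` returns an error string instead of raising. The model sees that text as an observation and can pick a different tool on its next turn.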

III. Memory (Short-term & Long-term)

For an agent to complete a three-hour task, it needs to remember what it did in hour one.

Short-term: Stored in the context window.

Long-term: Stored in Vector Databases (like Pinecone or Milvus) using RAG.
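The split between short-term and long-term memory can be sketched as follows. Short-term memory is a bounded buffer standing in for the context window. Long-term memory is a small in-process store that ranks saved notes by cosine similarity to a query. The word-count "embedding" is a deliberate simplification, and `LongTermMemory` is a hypothetical class. A real system would use an embedding model and a vector database such as Pinecone or Milvus.

```python
import math
from collections import Counter, deque

def embed(text):
    # Toy embedding: bag-of-words counts (a real system would use a learned embedding model).
    return Counter(text.lower().split())

def cosine(a, b):
    dot = sum(a[w] * b[w] for w in a)  # Counter returns 0 for missing words
    norm = math.sqrt(sum(x * x for x in a.values())) * math.sqrt(sum(x * x for x in b.values()))
    return dot / norm if norm else 0.0

class LongTermMemory:
    """Hypothetical in-memory stand-in for a vector database."""
    def __init__(self):
        self.items = []  # list of (vector, text) pairs

    def add(self, text):
        self.items.append((embed(text), text))

    def search(self, query, k=1):
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [text for _, text in ranked[:k]]

# Short-term memory: only the 3 most recent notes fit in the "context window".
short_term = deque(maxlen=3)
long_term = LongTermMemory()

for note in ["hour one: cloned the repo", "hour one: found failing login test",
             "hour two: refactored css", "hour three: writing pull request"]:
    short_term.append(note)
    long_term.add(note)

print(list(short_term))
print(long_term.search("which login test was failing"))
```

The first note has fallen out of `short_term`, but it is still in `long_term`. Every note stays retrievable there, so the hour-one discovery about the login test can be recalled in hour three.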

IV. Reflection and Self-Correction

This is the “Secret Sauce.” After an LLM performs a task, the workflow asks the model: “Is this answer correct? Does it meet the user’s safety constraints?” If the answer is no, the agent goes back to step one.
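A reflection step can be sketched as a generate, critique, and revise loop with a cap on attempts. `draft_answer` and `critique` are hypothetical stand-ins for model calls. The drafter improves when it receives feedback, and the critic checks a simple, made-up constraint: the answer must cite a source. The attempt limit matters, because a critic that is never satisfied would otherwise loop forever.

```python
def draft_answer(task, feedback=None):
    # Hypothetical stand-in for a model call; a real agent would put the feedback in the prompt.
    answer = f"Answer to: {task}"
    if feedback:
        answer += " [source: internal wiki]"
    return answer

def critique(answer):
    """Hypothetical reviewer: returns None if acceptable, otherwise feedback text."""
    if "[source:" not in answer:
        return "Cite a source for the claim."
    return None

def solve_with_reflection(task, drafter=draft_answer, critic=critique, max_attempts=3):
    feedback = None
    for attempt in range(1, max_attempts + 1):
        answer = drafter(task, feedback)   # generate, or revise using the feedback
        feedback = critic(answer)          # reflect: does it meet the constraints?
        if feedback is None:
            return answer, attempt
    raise RuntimeError(f"no acceptable answer after {max_attempts} attempts: {feedback}")

answer, attempts = solve_with_reflection("Summarize the Q3 policy change")
print(attempts, answer)
```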

4. Real-World Example: The Autonomous Developer 💻

The most common application of a large language model agentic workflow in 2026 is software engineering. Let’s look at how an agentic system like “Devin” or “OpenDevin” (since renamed OpenHands) handles a bug report.

Ingestion: The agent receives a GitHub issue: “Login button is broken on mobile.”

Exploration: The large language model agentic workflow triggers a “File Search” tool to find the Login.js and MobileStyles.css files.

Hypothesis: The AI reasons: “The padding might be overlapping the button on screens smaller than 400px.”

Execution: The agent writes a fix in a sandbox environment.

Testing: It runs a headless browser test. The test fails.

Self-Correction: The AI realizes it edited the wrong CSS class. It reverts the change and tries again.

Submission: Once the test passes, it submits a Pull Request.

This is the power of the large language model agentic workflow: the agent handles the diagnose, fix, and test loop on its own, so the human’s role shrinks to reviewing the final Pull Request.

5. Implementation: CrewAI, LangGraph, and AutoGen

If you are a developer looking to build a large language model agentic workflow in 2026, you aren’t starting from scratch. Several frameworks have emerged as industry leaders.

1. CrewAI: Role-Based Agents

CrewAI allows you to define a “Crew” of agents with different roles (e.g., a “Researcher” and a “Writer”). They pass tasks back and forth until the project is done.
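The role-based idea can be illustrated without the library. This is not CrewAI’s API. `RoleAgent` and `CrewTask` are hypothetical classes, and `work` is a stand-in for an LLM call prompted with the agent’s role. Tasks run as a simple sequential hand-off, with each output passed to the next agent as context.

```python
from dataclasses import dataclass

@dataclass
class RoleAgent:
    role: str
    goal: str

    def work(self, task: str, context: str) -> str:
        # Hypothetical stand-in for an LLM call prompted with this agent's role and goal.
        return f"[{self.role}] {task} (using: {context or 'nothing yet'})"

@dataclass
class CrewTask:
    description: str
    agent: RoleAgent

def run_crew(tasks: list[CrewTask]) -> list[str]:
    outputs, context = [], ""
    for task in tasks:
        result = task.agent.work(task.description, context)
        outputs.append(result)
        context = result  # hand this result to the next agent in the crew
    return outputs

researcher = RoleAgent("Researcher", "Collect facts about the topic")
writer = RoleAgent("Writer", "Turn research into a blog post")
outs = run_crew([CrewTask("Research agentic workflows", researcher),
                 CrewTask("Draft the article", writer)])
for line in outs:
    print(line)
```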

2. LangGraph: Cyclic Graphs

Created by the LangChain team, LangGraph is the gold standard for building a large language model agentic workflow that requires loops. It allows you to define “nodes” (actions) and “edges” (paths) that can go backward if a condition isn’t met.
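To make "nodes", "edges", and a backward edge concrete, here is a dependency-free sketch of a tiny cyclic graph runner. It mimics the idea, not LangGraph’s actual API. Nodes are functions that update a shared state dict, and a router picks the next node, which may be an earlier one. The approval rule is made up, and `max_steps` keeps the cycle from running forever.

```python
def write_draft(state):
    state["draft_version"] += 1
    state["draft"] = f"draft v{state['draft_version']}"
    return state

def review(state):
    # Hypothetical rule: the reviewer approves from the third version onward.
    state["approved"] = state["draft_version"] >= 3
    return state

NODES = {"write": write_draft, "review": review}

def route(node, state):
    """Edges: write -> review; review -> write (loop back) or END."""
    if node == "write":
        return "review"
    return "END" if state["approved"] else "write"

def run_graph(state, start="write", max_steps=20):
    node, steps = start, 0
    while node != "END":
        if steps >= max_steps:
            raise RuntimeError("graph did not terminate")
        state = NODES[node](state)
        node = route(node, state)
        steps += 1
    return state

final = run_graph({"draft_version": 0, "draft": "", "approved": False})
print(final["draft"], final["approved"])
```

In LangGraph itself, the corresponding pieces are a `StateGraph` with `add_node` and `add_conditional_edges`. Check its documentation for the exact signatures rather than relying on this sketch.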

3. Microsoft AutoGen

AutoGen focuses on multi-agent conversation. It’s perfect for complex brainstorming or scenarios where one agent acts as a “Legal Reviewer” and another as a “Creative Director.”
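A multi-agent conversation can be pictured as agents taking turns on a shared transcript until one of them sends a termination signal. This sketch does not use AutoGen. `ChatAgent` is a hypothetical class, and each reply function is a scripted stand-in for an LLM. The turn limit acts as a safety cap.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class ChatAgent:
    name: str
    reply: Callable[[list], str]  # hypothetical stand-in for an LLM call over the transcript

def creative_reply(transcript):
    # First pitch makes a risky claim; after legal pushback it softens the wording.
    if any("guarantee" in msg for speaker, msg in transcript if speaker == "Legal Reviewer"):
        return "Slogan: Results that speak for themselves."
    return "Slogan: Guaranteed results!"

def legal_reply(transcript):
    last = transcript[-1][1]
    if "Guaranteed" in last:
        return "Rejected: we cannot guarantee outcomes."
    return "APPROVED"

def converse(agents, opening, max_turns=6):
    transcript = [("User", opening)]
    for turn in range(max_turns):
        agent = agents[turn % len(agents)]  # agents speak in round-robin order
        message = agent.reply(transcript)
        transcript.append((agent.name, message))
        if message == "APPROVED":  # termination signal
            break
    return transcript

director = ChatAgent("Creative Director", creative_reply)
lawyer = ChatAgent("Legal Reviewer", legal_reply)
chat = converse([director, lawyer], "Write a slogan for our new app.")
for speaker, msg in chat:
    print(f"{speaker}: {msg}")
```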

External Resource: Explore the official LangGraph documentation to see how to build cyclic agentic workflows.

6. The Impact on Business and Millions of People 🌍

Why does the large language model agentic workflow matter to the average person? Because it changes the cost of labor.

Healthcare Example

In 2026, a medical agentic workflow can:

Scan a patient’s historical records.

Check for drug interactions using a pharmaceutical database.

Draft a pre-consultation report for the doctor.

Schedule the follow-up appointment automatically.

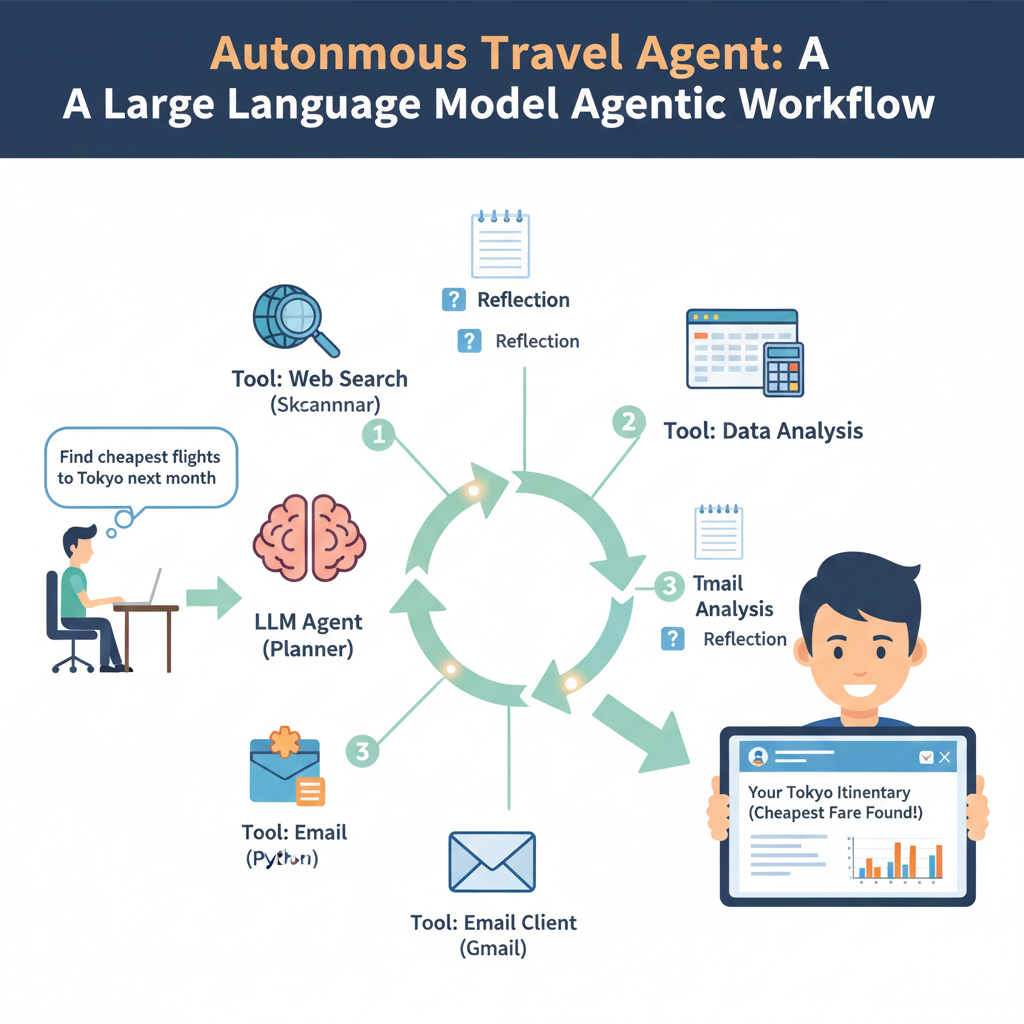

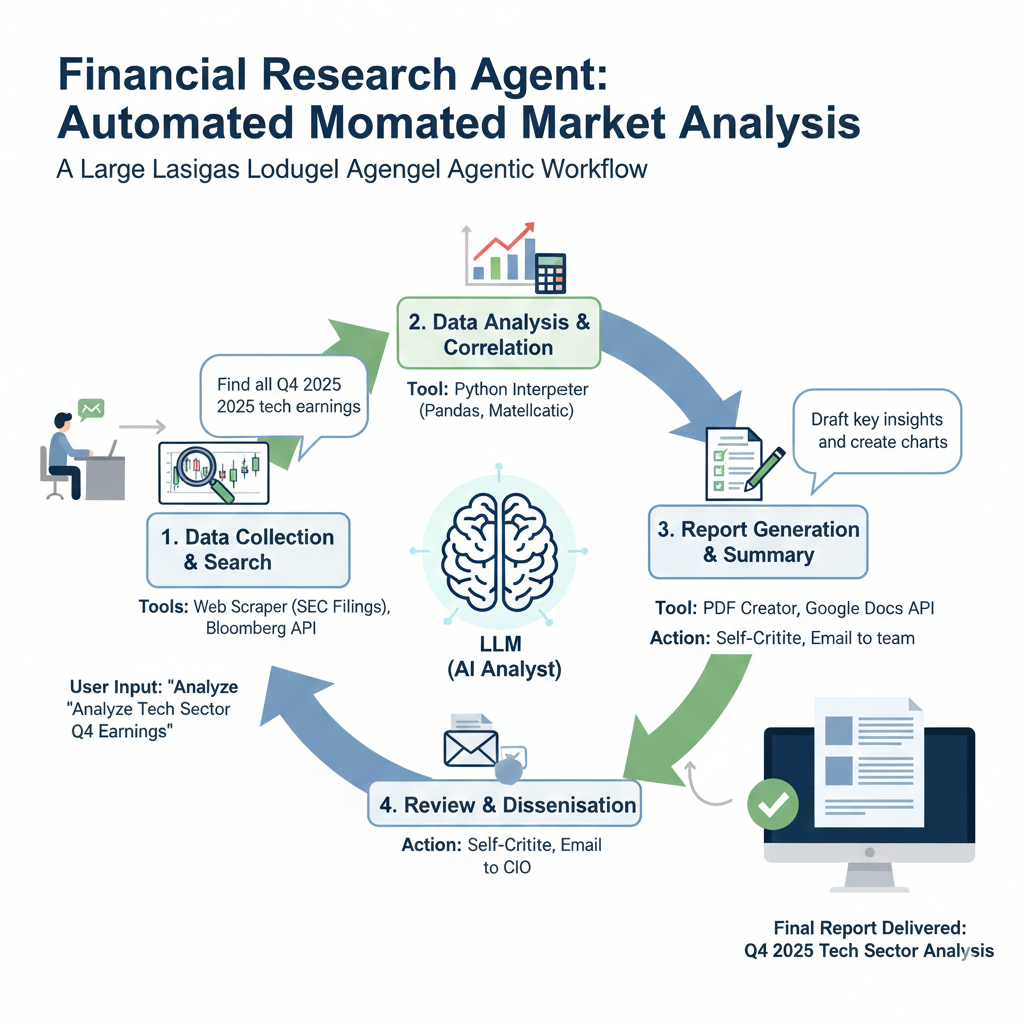

Financial Research Example

An analyst can use a large language model agentic workflow to monitor 500 different stock tickers. Instead of just sending alerts, the agent can write a 10-page analysis of why a stock is moving, citing SEC filings and social media sentiment in real-time.

7. Challenges and Ethics in 2026 ⚖️

Despite the efficiency of the large language model agentic workflow, we face new challenges:

Agentic “Loops of Death”: If not properly constrained, an agent might get stuck in a loop, spending thousands of dollars in API credits by repeating the same failed task.

Security: Giving an LLM the ability to “run code” or “delete files” is inherently risky. “Prompt Injection” attacks become much more dangerous in an agentic environment.

The “Black Box” Problem: As workflows become more complex, understanding why an agent made a specific decision becomes harder for human auditors.
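The first two risks can be partly mitigated in the orchestration code rather than in the prompt. The sketch below uses a hypothetical `Guardrail` class, not a feature of any named framework. It enforces a step limit and a spending cap, stops the agent when it repeats an identical action, and refuses tools marked as dangerous unless a human-approval callback allows them. The per-call costs are made-up numbers.

```python
class BudgetExceeded(Exception):
    pass

class Guardrail:
    """Hypothetical pre-execution check an orchestrator calls before every tool use."""
    def __init__(self, max_steps=10, max_cost=1.00, max_repeats=2,
                 dangerous=("delete_file", "run_shell"), approve=lambda action: False):
        self.max_steps, self.max_cost, self.max_repeats = max_steps, max_cost, max_repeats
        self.dangerous = set(dangerous)
        self.approve = approve  # human-in-the-loop callback; denies by default
        self.steps, self.cost = 0, 0.0
        self.seen = {}          # (tool, args) -> number of attempts

    def check(self, tool, args, cost):
        """Raise if the action must not run; otherwise return normally."""
        self.steps += 1
        self.cost += cost
        if self.steps > self.max_steps:
            raise BudgetExceeded(f"step limit {self.max_steps} reached")
        if self.cost > self.max_cost:
            raise BudgetExceeded(f"spend ${self.cost:.2f} exceeds cap ${self.max_cost:.2f}")
        key = (tool, repr(args))
        self.seen[key] = self.seen.get(key, 0) + 1
        if self.seen[key] > self.max_repeats:
            raise BudgetExceeded(f"{tool} repeated with identical arguments")
        if tool in self.dangerous and not self.approve((tool, args)):
            raise PermissionError(f"{tool} requires human approval")

guard = Guardrail(max_cost=0.05)
try:
    for _ in range(10):
        guard.check("search", {"q": "login bug"}, cost=0.01)  # an agent stuck retrying
except BudgetExceeded as err:
    print("stopped:", err)
```

The repeat check stops the stuck agent on its third identical call, before the spending cap is reached. A destructive tool such as `delete_file` is refused outright unless the `approve` callback, for example a prompt to a human operator, returns `True`.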

External Resource: Read the OpenAI Safety Guidelines regarding the deployment of autonomous agents.

8. Looking Ahead: The Agentic Economy

As we move deeper into 2026, the large language model agentic workflow will move from our desktops to our physical world. With the rise of humanoid robotics and IoT, agentic workflows will soon control how warehouses are managed and how self-driving logistics operate.

The skill of the future isn’t just “prompt engineering”—it is Agentic Design. It is the ability to architect the flow of information, tools, and feedback loops that allow AI to work for us.

Summary Checklist for a Successful Workflow:

[ ] Does the agent have a clear goal?

[ ] Does the agent have access to the right tools?

[ ] Is there a reflection step to catch hallucinations?

[ ] Is there a human-in-the-loop for high-stakes decisions?

Conclusion

The large language model agentic workflow is the definitive technology of 2026. By combining the linguistic brilliance of LLMs with the structured execution of agentic loops, we are unlocking a level of productivity never before seen in human history. Whether you are a developer, a business owner, or a curious observer, understanding these workflows is your key to the future of AI.

🤖 Frequently Asked Questions

Q1: What exactly is a large language model agentic workflow? A: A large language model agentic workflow is a system where an AI acts as an “agent” to complete complex goals. Instead of just giving a single answer, the workflow allows the AI to plan steps, use tools (like a browser or code editor), and self-correct its work until the task is finished. 🔄

Q2: How does a large language model agentic workflow differ from a normal chatbot? A: A normal chatbot is “linear”—you ask a question, and it gives a response. A large language model agentic workflow is “iterative.” It can work in loops, verify its own data, and interact with external software APIs to execute real-world actions without human help. 🛠️

Q3: Why is a large language model agentic workflow better for businesses in 2026? A: It drastically reduces manual work. While a basic AI can draft an email, a large language model agentic workflow can research a lead, write the email, send it through your CRM, and schedule a follow-up if there is no reply. It moves AI from a “writer” to a “worker.” 📈

Q4: Do I need to be a coder to use a large language model agentic workflow? A: While developers use frameworks like LangGraph or CrewAI, many “no-code” platforms are now integrating large language model agentic workflow features. This allows non-technical users to build autonomous agents by simply defining the goals and the tools the agent is allowed to use. 👩‍💻

Q5: Are there risks to using a large language model agentic workflow? A: The main risks involve “infinite loops” and security. Because a large language model agentic workflow can run autonomously, it is important to set spending limits on API usage and provide “human-in-the-loop” checkpoints for high-stakes financial or legal decisions. 🛡️